This is an essay based on a talk, which I gave in February 2013 to entertain colleagues and friends.

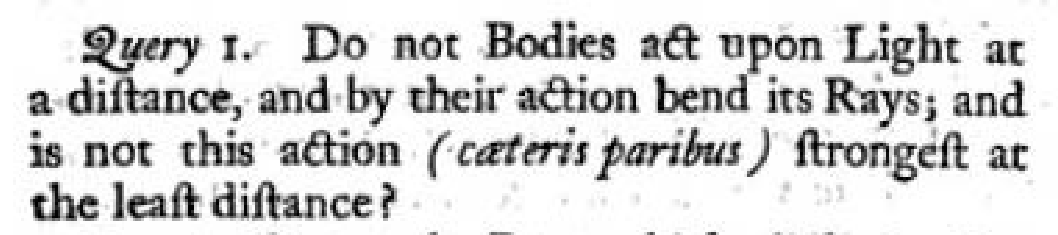

Imagine Newton at age sixty, recognized by his contemporaries as the lion among them, even the ones who don't like him. He is still very active, but probably realizing that he is not going to solve everything he wants to in one lifetime. And he writes this book on light, called Opticks, and at the end he puts these Queries, sort of like problems for future generations.

Future generations did pay attention to Query 1, and the person most remembered now for doing so was Soldner, a century later. Soldner knew that fast-moving particles near the Sun don't go into orbit, they only get slightly deflected. And he calculated how much something moving at the speed of light would be deflected in Newtonian gravity, and says: look, it's very small but measurable. The idea is: pick a star, like Regulus in Leo, which goes behind the Sun. First take good observations of Leo during winter nights. Then observe Leo in August, during the day, when Regulus is at the rim of the Sun. Regulus should appear slightly displaced, relative to the rest of Leo, because of light deflection. Soldner is so close to doing the experiment in 1801. But then he gives up, saying you'll never see stars at the rim of the Sun. You read his paper now, and you're like -- man, haven't you heard of eclipses? But nobody picks up on this. Through the nineteenth century, physicists gradually unravel electricity and magnetism. Light is a wave, of alternating electric and magnetic fields, why should gravity do anything to it?

Until eventually, a certain Albert Einstein finds the answer to Newton's question. Yes, he said, light is deflected by gravity, but twice as much as you'd guess in Newton's theory. And by the way, said Einstein: light is both a particle and a wave.
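To put rough numbers on the two predictions, here is a minimal sketch for a light ray grazing the rim of the Sun. It assumes the standard small-angle formulas, 2GM/(c²R) for the Newtonian case and twice that for general relativity, together with textbook values for the solar constants. Neither the formulas nor the constants are quoted from Soldner's paper or from the talk.

```python
import math

# Assumed textbook constants (not taken from the talk).
GM_SUN = 1.32712440018e20   # m^3/s^2, gravitational parameter of the Sun
R_SUN = 6.957e8             # m, nominal solar radius
C = 299_792_458.0           # m/s, speed of light
RAD_TO_ARCSEC = math.degrees(1.0) * 3600.0

def newtonian_deflection(gm=GM_SUN, r=R_SUN):
    """Small-angle deflection (radians) of a particle passing a mass at speed c."""
    return 2.0 * gm / (C ** 2 * r)

def einstein_deflection(gm=GM_SUN, r=R_SUN):
    """General relativity doubles the Newtonian small-angle value."""
    return 2.0 * newtonian_deflection(gm, r)

newton_arcsec = newtonian_deflection() * RAD_TO_ARCSEC
einstein_arcsec = einstein_deflection() * RAD_TO_ARCSEC
print(f"Newtonian: {newton_arcsec:.2f} arcsec, Einstein: {einstein_arcsec:.2f} arcsec")
```

Both angles come out at around an arcsecond, which is why the measurement needed an eclipse and careful photography.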

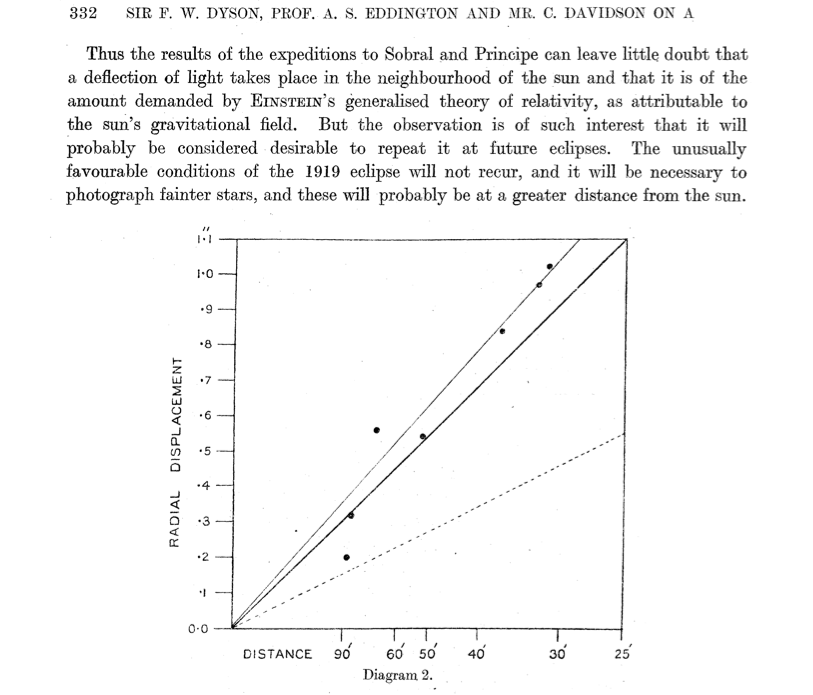

In 1919 Eddington and friends finally did the experiment Soldner never did. And here's their result:

Newtonian theory boo, Einsteinian theory yay. At least, that's how the newspapers presented it, and Einstein became a household name.

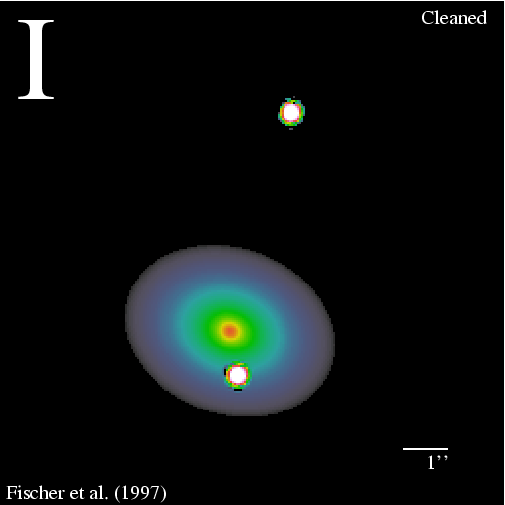

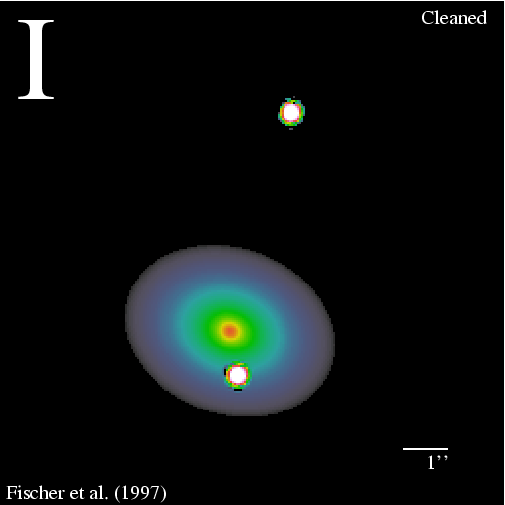

It would be sixty years before light deflection made the mass media again. In the 1970s the forerunners of today's digital cameras got put on telescopes, and astronomers could image much much fainter things. And here's what they saw.

The images weren't so pretty yet -- these are from the Hubble telescope much later -- but the essentials could be seen. This is a light-deflection experiment on a much much larger scale. Instead of the Sun, we have a galaxy maybe a billion light-years away. Instead of a star we have a quasar several billion light-years away. The gravity of the whole galaxy pulls the light so much that some of it comes out the other side. We can see two images of the quasar, or even four. These are now called gravitational lenses. They are not common, because you need a bright thing like a quasar almost directly behind a massive thing like a galaxy. But a few hundred have been found scattered around the sky. These were the first two. There's another thing. The quasar here varies in intensity. So sometimes this image varies in intensity over a period of days. Then about a year later, well 59 weeks later, the other image does the same. The light travel time is billions of years, but this one is 59 weeks longer. But only in a few percent of lenses is the source so conveniently variable.

In order to understand these mirages, we really need to delve into Einstein's theory a little bit. And the central idea is actually not unfamiliar to us.

Hawaii is south of Zürich, right? But to fly there, the shortest route takes you over Greenland.

And Cape Town to Auckland takes you over Antarctica.

These flight-path curves are called geodesics. It's easy to move along a geodesic: you just go in some direction and keep going. These South Africans on their way to New Zealand will see themselves as flying straight. It's only the map that tells them they go SE first and then NE. All because the Earth's surface is curved.
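The compass headings along these routes can be checked with a short sketch. It assumes a perfectly spherical Earth, the standard great-circle bearing formula, and rounded city coordinates that I have filled in for illustration (Honolulu stands in for Hawaii).

```python
import math

def initial_bearing(lat1, lon1, lat2, lon2):
    """Compass bearing in degrees (0 = north, 90 = east) on leaving point 1
    along the great circle towards point 2. Inputs are in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def final_bearing(lat1, lon1, lat2, lon2):
    """Compass bearing on arriving at point 2: the reverse route's bearing, turned around."""
    return (initial_bearing(lat2, lon2, lat1, lon1) + 180.0) % 360.0

# Rounded coordinates (latitude, longitude), assumed for illustration.
ZURICH = (47.4, 8.5)
HONOLULU = (21.3, -157.9)
CAPE_TOWN = (-33.9, 18.4)
AUCKLAND = (-36.9, 174.8)

zurich_start = initial_bearing(*ZURICH, *HONOLULU)
cape_start = initial_bearing(*CAPE_TOWN, *AUCKLAND)
cape_end = final_bearing(*CAPE_TOWN, *AUCKLAND)
print(f"Zurich -> Honolulu starts at {zurich_start:.0f} degrees")
print(f"Cape Town -> Auckland starts at {cape_start:.0f} and ends at {cape_end:.0f} degrees")
```

The flight from Zürich sets off a little west of due north, even though Hawaii lies to the south. The flight from Cape Town starts between east and south and arrives between north and east, without the pilot ever turning.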

Now, if you're in orbit or just coasting through space, you don't feel any gravitational force pulling you. Like those South Africans on the plane, you seem to be just moving in a straight line. It's only the outside world that thinks you are being pulled by something. Einstein thought a lot about scenarios like this, and then said: maybe gravity isn't really a force at all, maybe it's space and time getting curved. And he called it general relativity.

In general relativity, both space and time can get curved. We mostly only notice the curvature in time, because we don't move very much in space. And Newtonian gravity comes out as an approximate theory of time curvature. But light moves a lot in space, so it notices space curvature too. That's why gravity is stronger for light in Einstein's theory than in Newton's.

So Newton posed the question of light and gravity and Einstein answered it. Is there really anything more to say? Yes, we haven't heard from Darwin yet!

What on Earth is Charles Darwin doing, writing about gravity, a hundred years after the Origin of Species?! Well, this is Charles Darwin's grandson, a very fine mathematical physicist. This paper could have been called light near a black hole, but the term black hole didn't exist yet.

Here is an animation by Raymond Angélil of a clock near a black hole, sending out light pulses to a distant observer.

A slightly technical point: is it valid to represent photons as dots? What about interference? Well, I think it's ok to represent photons as dots if the observer's time resolution is much coarser than the coherence times of the light, and that is the regime we expect to be in, in the foreseeable future. One can calculate interference and quantum-optical effects: the most fascinating is Hawking radiation, which is a black hole glowing very very faintly. But we don't have an idea of where to begin to look for something like that, so we'll leave that topic, regretfully.

Now let's look at another scenario. This time, we start a bunch of photons some way away from the black hole. Some will fall in: I've omitted those from the animation altogether, leaving this gap. The others will travel past the black hole, getting deflected.

Let's add more photons, so it's like setting off a light flash. The observer somewhere on the rim will see the photons coming from two different directions, and then see the whole thing again, but fainter.

Seeing that echo would be cool, but it's not really feasible yet. The situation one can observe is more like this.

You have some mass, not necessarily a black hole, and you can see two images. If the mass isn't round, you may see more images.

The next step is going from a general understanding of why you get multiple images to actually modelling the observations. That is to say, figuring out what the gravitational field is, and what kind of mass distribution in these lensing galaxies would produce it. I'd like to explain one way of going about that, not the only way, but a very intuitive one. That's using Fermat's principle. Fermat's principle, in modern form, is that light is like an airline company: it wants to get from a light source to an observer, and it finds a geodesic to take it. If there's more than one geodesic, it takes them all.

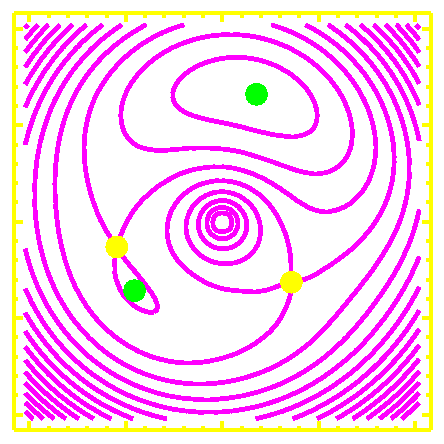

Let's go back to those light-flash animations and do a thought experiment --- you couldn't really do this, it's just useful to think about.

Let's freeze this blue flash, and send this yellow light flash out from the observer. When the yellow crosses the blue, we draw a magenta dot here. The horizontal axis labels the yellow flash according to the initial outward angle. The vertical axis is the time the yellow crosses the blue. Now, let's imagine the blue photons, instead of freezing, carry on in the direction the yellow was coming from. The right figure would be the arrival time as a function of direction. It turns out, this magenta thing is straightforward to calculate. But it's not a physical quantity. Because photons are not going to jump from one flash to the other. Except, when a yellow comes from the direction the blue was going in anyway. And when is that? When blue and yellow are tangential. Which is when this magenta becomes horizontal. These horizontal points represent when the light flash arrives, and from which direction. The rest is just scaffolding.

This whole animation is, of course, just a slice. We need to rotate the blue about the horizontal axis, and the yellow about another axis. And this magenta will become a surface, with a minimum here, a maximum here, and this one will actually be a saddle point.
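As a minimal sketch of the arrival-time idea, consider the simplest textbook lens, a single point mass, in scaled units. The arrival time used here, τ(θ) = ½(θ − β)² − θ_E² ln|θ|, is the standard thin-lens form and is my assumption, not the calculation behind the animation. The symbols θ (direction on the sky), β (unlensed source direction) and θ_E (Einstein radius) are likewise introduced only for illustration. Images sit where the surface is flat, and the two curvatures of the surface say whether each one is a minimum or a saddle.

```python
import math

def arrival_time(theta, beta, theta_e=1.0):
    """Scaled arrival time along the lens-source axis for a point-mass lens."""
    return 0.5 * (theta - beta) ** 2 - theta_e ** 2 * math.log(abs(theta))

def image_positions(beta, theta_e=1.0):
    """Directions where the arrival-time surface is flat:
    theta - beta - theta_e**2 / theta = 0."""
    root = math.sqrt(beta ** 2 + 4.0 * theta_e ** 2)
    return (beta + root) / 2.0, (beta - root) / 2.0

def classify(theta, theta_e=1.0):
    """Classify a flat point from the radial and tangential curvatures."""
    radial = 1.0 + (theta_e / theta) ** 2
    tangential = 1.0 - (theta_e / theta) ** 2
    if radial > 0 and tangential > 0:
        return "minimum"
    if radial < 0 and tangential < 0:
        return "maximum"
    return "saddle"

beta = 0.5  # hypothetical source offset, in units of the Einstein radius
outer, inner = image_positions(beta)
for theta in (outer, inner):
    print(f"theta = {theta:+.3f}: {classify(theta)}, "
          f"arrival time = {arrival_time(theta, beta):.3f}")
```

In this idealized case the image on the source's side of the lens is the minimum and arrives first, while the image on the far side is the saddle point and arrives later. The maximum sits on top of the point mass itself, where the surface diverges, so no third image appears.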

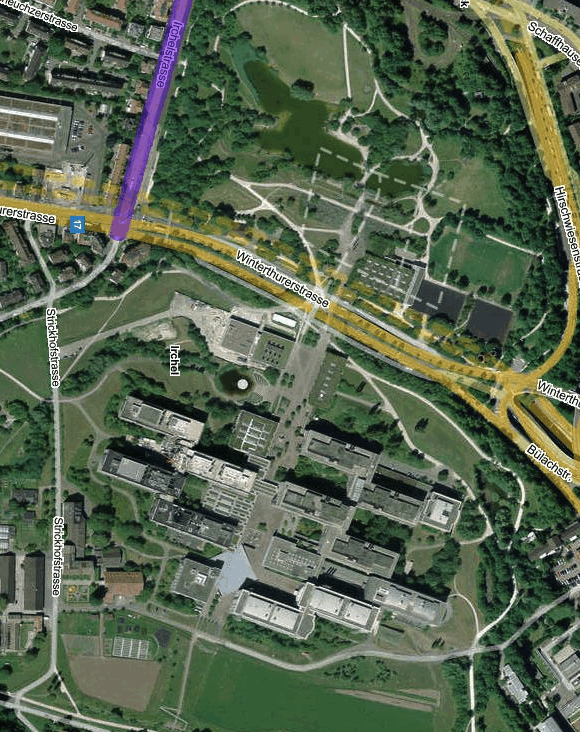

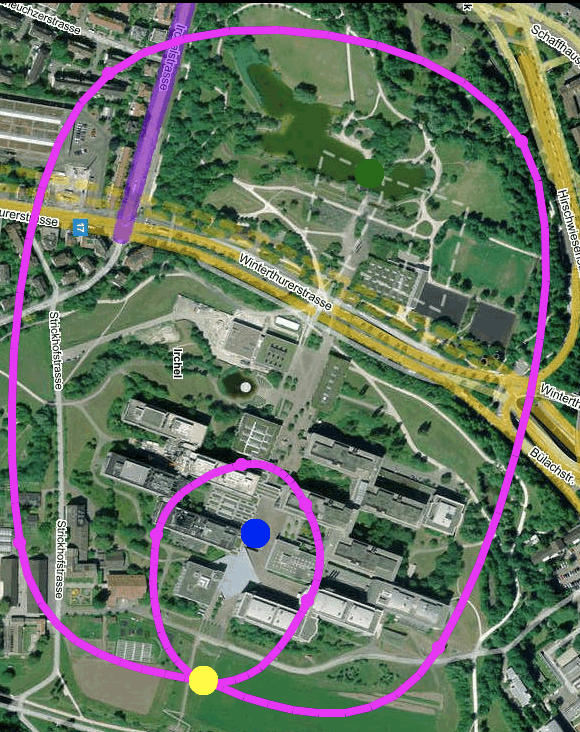

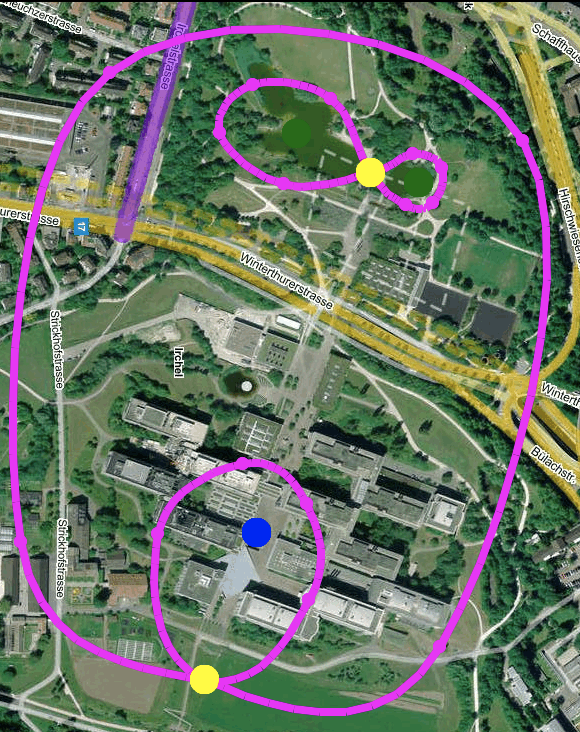

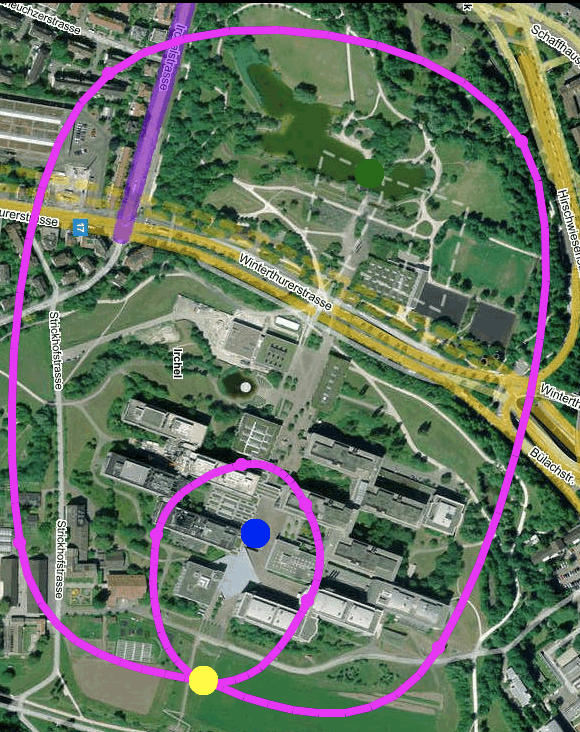

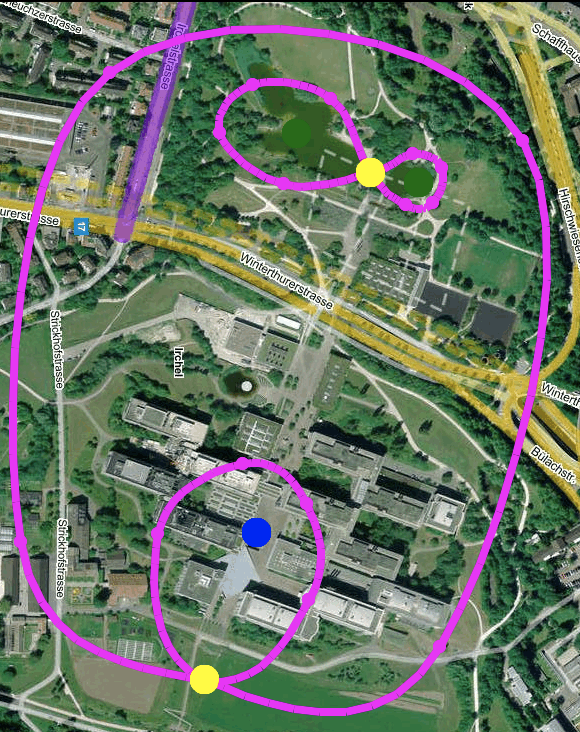

OK, let's get more concrete. This is Irchel campus, with North to the right.

What's the topography? The lowest point will be, say, at the pond here. The highest point will be among the buildings. What will the elevation contours look like? Well, there will be one contour that crosses itself, and the crossing point will be a saddle point.

From the saddle point, the elevation goes up forward and back, and down sideways.

It can get more interesting. The pond is actually a double pond, with a kind of isthmus in between.

So it's two minima with a saddle in between. The nice thing is that you can characterize any topographic map using just these two kinds of contours. In particular, the arrival-time surface for photons.

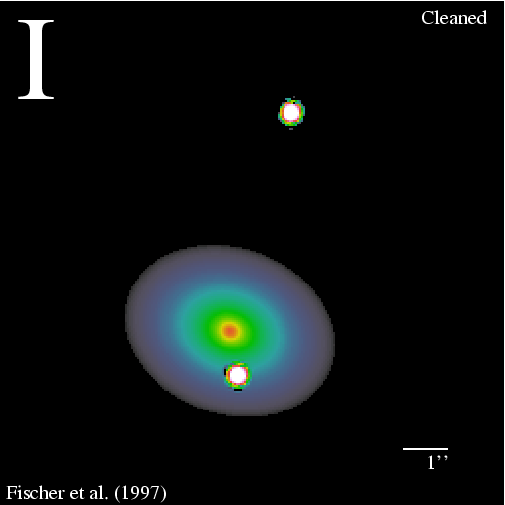

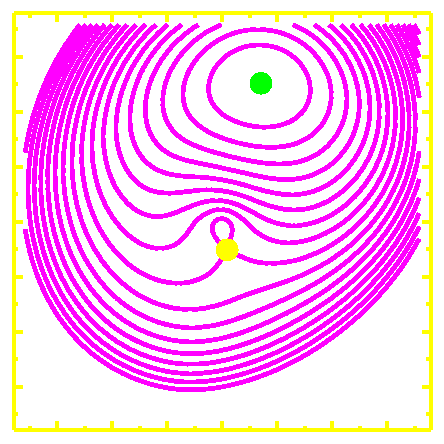

So here's that double-image quasar, the first gravitational lens to be discovered.

Alongside it is the arrival-time surface, according to a detailed model. Remember the surface is just an artificial construct -- we can only observe images at the minimum here and the saddle-point here. Qualitatively, it's rather like Irchel campus.

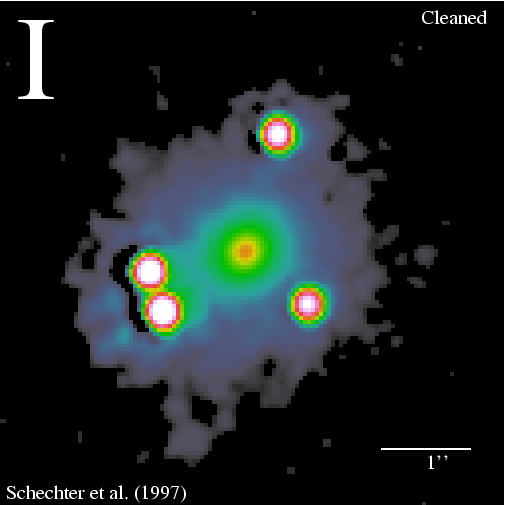

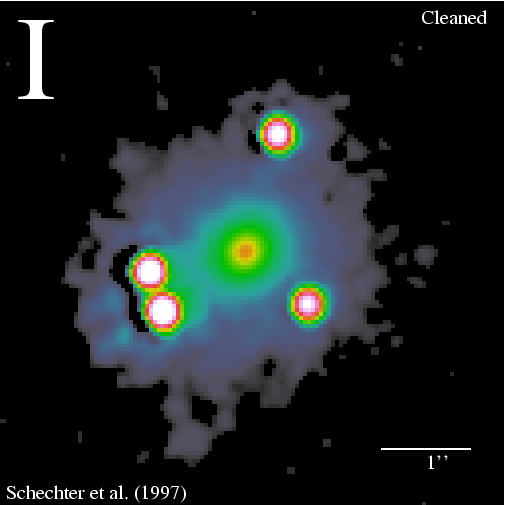

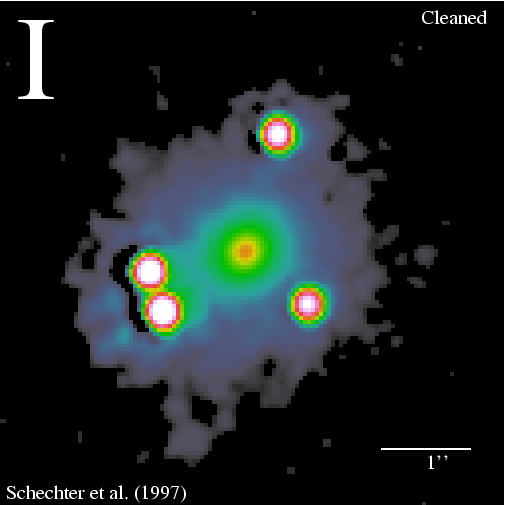

In the first four-image system, we have two minima and two saddle points.

The topography of the Irchel campus doesn't look so similar, but if you imagine the pond stretching down to the left, it would be.

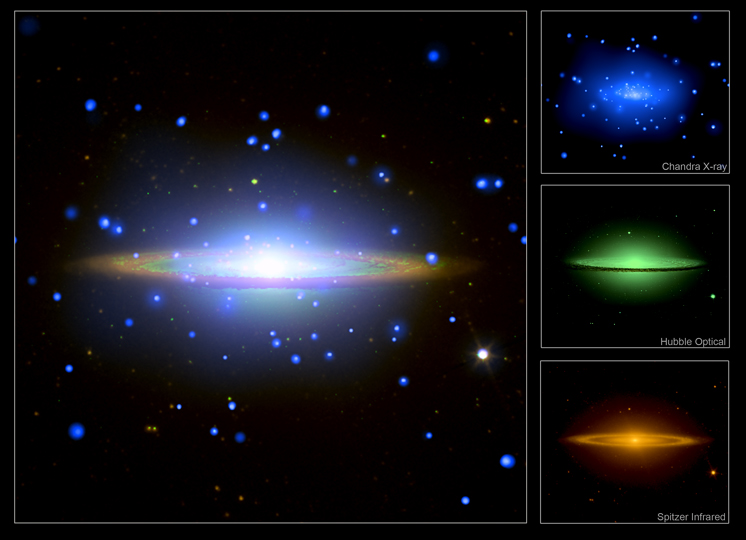

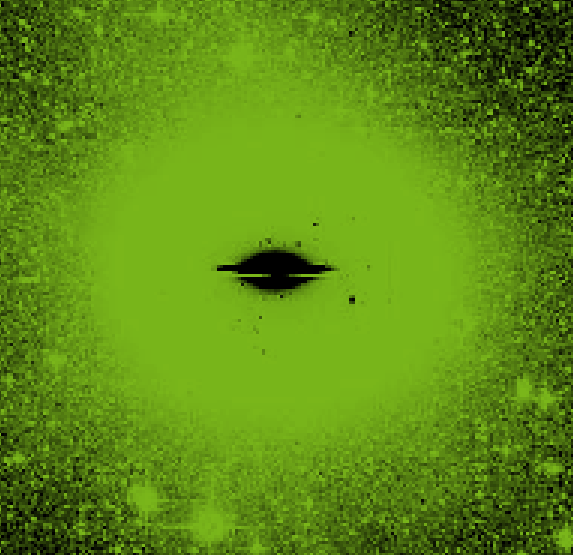

Once you have this modelling machinery in hand, you can go on to some very interesting things. One is to study dark matter. What's dark matter? Well, here's a galaxy. Not special as galaxies go, just a pretty face.

The collage on the left is from Astronomy Picture of the Day and it has several false-colour images: in infrared, visible light, and x-rays, and a composite. On the right is a picture in visible light but with a much longer exposure. The pretty galaxy is completely overexposed and here it's replaced by a negative. And what we can see now is this very faint kind of halo of stars. These halo stars are presumably in orbit, in a gravitational field. But what's generating that gravitational field? It can't be the stars themselves, there's not enough mass in stars to do that -- otherwise the halo would have been much brighter. There has to be a lot of mass there, which we can't see. There are several things it could be, but nobody really knows yet, and meanwhile we just unimaginatively call it dark matter.

You can use gravitational lensing to map the dark halos around galaxies.

This animation is by Dominik Leier -- summarizing his PhD thesis in six seconds, so I wouldn't try to read everything now -- but what Dominik and Ignacio Ferreras have done here is to step outwards through a sample of lensing galaxies and plot the mass in stars inside against the total mass inside.

We see that at first the total mass tracks the stellar mass, but then the total mass seems to break away and increase more than the stellar mass.

Another thing you can do with gravitational lenses is study the expanding universe.

By the way, if you like non-technical science books, try this one by two of our ETH colleagues.

I read it last summer and really learned a lot.

Here's an animation of the expanding universe. Actually, of two universes.

On the left we have what was thought --until 1998-- to be our universe: it's called the Einstein-de Sitter model. We see it from a little after the Big Bang, expanding very fast at first, and then slowing down. The animation pauses for a couple of seconds at the present --- the pale blue dot is us --- and then continues into the future. On the right, we have what is now thought to be our universe, and it is full of red wine. It's usually called dark energy, which is a terribly misleading name, because it's not really energy at all. But since nobody knows what it is anyway, and one of the discoverers, Brian Schmidt, grows Pinot Noir in his spare time -- it might as well be Pinot Noir. And what this Pinot Noir does, is stop the deceleration of the universe and make it accelerate again.

Now let's have some fireworks go off in the universe.

These explosions are completely identical. They're called Type Ia supernovae --- but never mind, they're basically identical explosions. They're not simultaneous, but from the pale blue dot they look simultaneous, because they arrive at the same time. These red rims represent the wavelength, which expands with the universe. Now the observed wavelengths for each supernova are identical in both models. But in the Pinot Noir universe, the earlier explosion is older and further away. So though it takes up more space on the animation, it is observed as fainter. It's the brightness ratio of the supernovae that tells us we live in an accelerating universe.
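The brightness argument can be sketched numerically by comparing the luminosity distance to the same redshift in the two models. This is a minimal sketch assuming spatially flat universes, a matter fraction of 0.3 and dark-energy fraction of 0.7 for the Pinot Noir case, and an expansion rate of 70 km/s/Mpc. All three numbers are illustrative choices of mine, not values from the talk.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s

def expansion_factor(z, omega_m, omega_lambda):
    """Expansion rate at redshift z relative to today, for a flat universe."""
    return math.sqrt(omega_m * (1.0 + z) ** 3 + omega_lambda)

def luminosity_distance(z, h0, omega_m, omega_lambda, steps=1000):
    """Luminosity distance in Mpc, using midpoint-rule integration over redshift."""
    dz = z / steps
    integral = sum(dz / expansion_factor((i + 0.5) * dz, omega_m, omega_lambda)
                   for i in range(steps))
    return (1.0 + z) * (C_KM_S / h0) * integral

z = 0.5    # same observed wavelength stretch in both models (illustrative)
h0 = 70.0  # km/s/Mpc, assumed

d_eds = luminosity_distance(z, h0, omega_m=1.0, omega_lambda=0.0)    # Einstein-de Sitter
d_pinot = luminosity_distance(z, h0, omega_m=0.3, omega_lambda=0.7)  # with dark energy
dimming = (d_pinot / d_eds) ** 2
print(f"Einstein-de Sitter: {d_eds:.0f} Mpc, with dark energy: {d_pinot:.0f} Mpc")
print(f"Same explosion appears fainter by a factor of {dimming:.2f}")
```

With these assumed numbers the supernova is further away in the accelerating model, so it looks noticeably fainter at the same redshift, which is the effect the animation illustrates.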

You can then work out what lensing does in an expanding universe. We'll skip the animation and just quickly see some results.

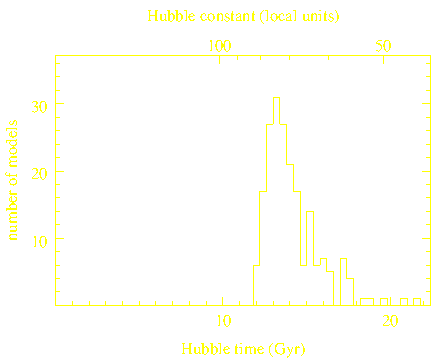

| Jonathan Coles (2008) | Paraficz and Hjorth (2010) |
| --- | --- |
| using 11 lenses | using 18 lenses |

Basically, the difference in light travel times lets you calculate the expansion time of the universe. Here Jonathan Coles gets an estimate of 13.5 billion years with an uncertainty of 15 percent. Paraficz and Hjorth plot the reciprocal of that quantity, the expansion rate. Their age estimate is a bit higher, but agrees within the uncertainties.
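Converting between the two ways of quoting the result is just a change of units. The sketch below assumes that the expansion time is the reciprocal of the expansion rate, as described above, and uses standard conversion constants. The resulting numbers are my arithmetic, not figures taken from either paper.

```python
KM_PER_MPC = 3.0857e19       # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in a billion Julian years

def expansion_rate(expansion_time_gyr):
    """Expansion rate in km/s/Mpc for a given expansion time in billions of years."""
    return KM_PER_MPC / (expansion_time_gyr * SECONDS_PER_GYR)

central = expansion_rate(13.5)
# A 15 percent uncertainty on the time maps onto a range of rates;
# the longer time gives the slower rate.
slowest = expansion_rate(13.5 * 1.15)
fastest = expansion_rate(13.5 * 0.85)
print(f"{central:.1f} km/s/Mpc, range {slowest:.1f} to {fastest:.1f}")
```

An expansion time of 13.5 billion years corresponds to a rate of a little over 72 km/s/Mpc, and the quoted uncertainty spans roughly ten units either way.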

Which is very nice, but you'd really like to get those uncertainties down. You want ten times as many lenses, or a hundred times if you can have them. Is that possible?

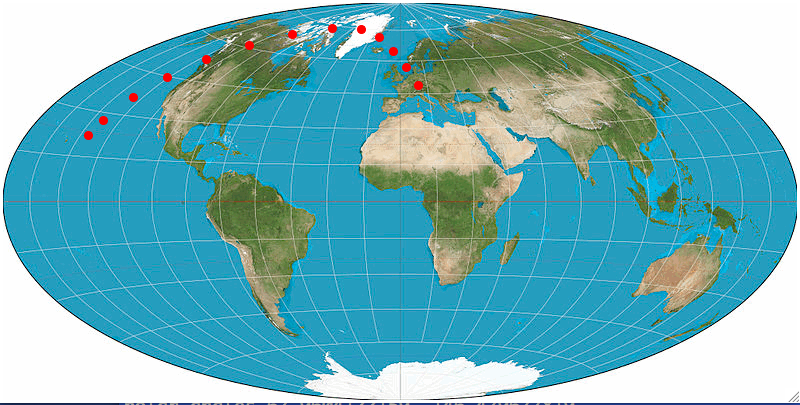

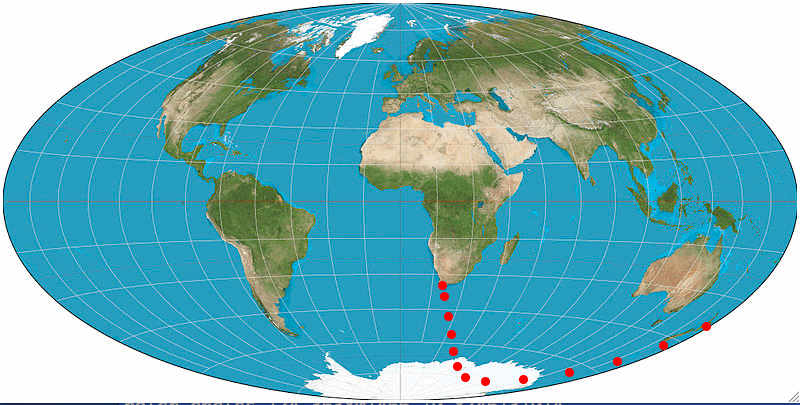

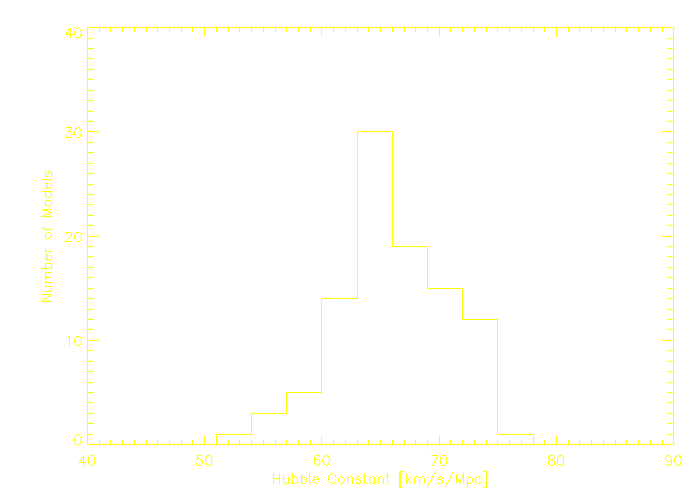

Here's a map of the sky with the known lenses shown on it. This kind of map is just a way of showing the whole sky at the same time.

That's the Milky Way, which is beautiful to look at but makes it difficult to see behind it, so not many lenses are found there. But basically, there are lensing galaxies scattered all over the sky, you just have to take long exposures and look through them very carefully.

Two big deep-sky surveys that are starting up now, DES and Pan-STARRS, would have thousands of lenses. And the Large Synoptic Survey Telescope, planned for the next decade, should see tens of thousands of lenses. And experiments with software searching show that computers can find the easy ones, but are not nearly as good as a trained human. It really needs a human with intuition looking through maybe a thousand big screenfuls to find one.

Five or six years ago, Kevin Schawinski, now our colleague at ETH but then at Oxford, and Chris Lintott, did a crazy experiment. They appealed to members of the public to help classify galaxy images. Now Chris Lintott is also a broadcaster, and I guess he carefully picked a slow news day to launch Galaxy Zoo, and somehow it went into the news at prime time. You can guess the rest of the story. But there's a second part to the story, which is that with time the volunteers of Zooniverse started asking for more difficult things.

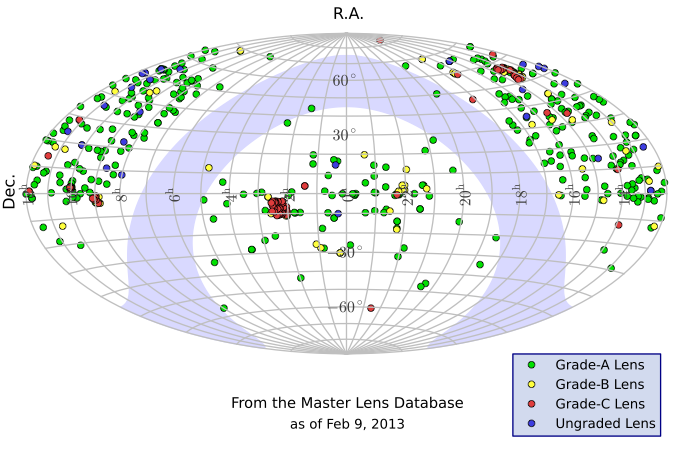

Here's one of several projects on now, which is transcribing manuscript fragments written in ancient Greek.

And now SpaceWarps is live!

Citizen science projects like Zooniverse are not scientific heavyweights yet, but they are getting increasingly important. Some enthusiasts are saying they will change the way science is done.

In astronomy, amateurs have always played a role. Could they start playing a leading role in the future? I don't know, but to conclude today, I'd like to show you something really experimental. Thanks to Rafael Küng for letting me be the first to present it. It's a platform for modelling gravitational lenses. The idea here is to sketch the arrival-time surface, identifying maxima, minima, saddle points. The machine will then take that sketch as input to make a model of the mass distribution of the lens. Then we can see the model and see if it looks reasonable.

While designed for SpaceWarps volunteers, it's all open development, so if a volunteer wants to get their hands dirty like a full-time researcher, they can go right ahead.